Early troubles with Heterogeneous Computing

A very interesting article on how macOS manages the cores of Apple's M1 CPU made the front page of Hacker News today. For those wondering what heterogeneous computing is, here's an intro from the article itself:

In conventional multi-core processors, like the Intel CPUs used in previous Mac models, all cores are the same. Allocating threads to cores is therefore a matter of balancing their load, in what’s termed symmetric multiprocessing (SMP). CPUs in Apple Silicon chips are different, as they contain two different core types, one designed for high performance (Performance, P or Firestorm cores), the other for energy efficiency (Efficiency, E or Icestorm cores).

Why ship different cores in processors?

The reasoning seems pretty simple: not every application out there requires a powerful processor core. So let's ship a bunch of low-powered cores and then figure out which programs should run on the low-powered cores and which on the high-powered ones (based on a bunch of data points). This results in no degradation of the user experience (provided it's done properly) and better battery life, so it's also good for the environment. Easy-peasy, right?

The Choice

The very next line of the article presents the choice:

For these to work well, threads need to be allocated by core type, a task which can be left to apps and processes, as it is in Asahi Linux, or managed by the operating system, as it is in macOS.

- The Red Pill: I'll diverge a bit from Morpheus's dialogue and start with the red pill. Asahi Linux, like many Linux distros, is very open by nature and lets developers and users tinker with almost everything under the hood and decide what's best for themselves. Wanna run everything on the P-cores? You got it. Want better battery efficiency? Tune it however you like.

-

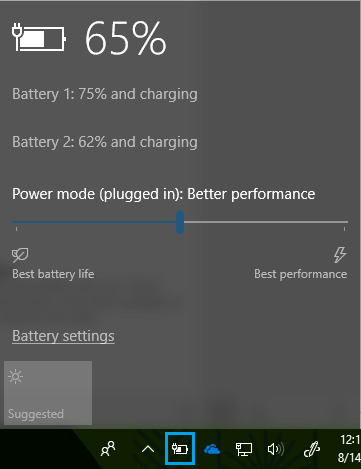

The Blue Pill: Stay in the Matrix. Let the OS manage such complicated stuff. In a battery-powered device, the OS needs to continuously make a choice between delivering a good battery life and delivering a good performance. The users and developers are mostly unaware of what’s under the hood, being presented with very limited options (read APIs) to fine-tune their apps. It has been doing a decent job so far for SMP processors. Remember the performance power slider in Windows? That’s all you’ll ever need with SMP.
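On Linux, including Asahi Linux, the red-pill route can be as direct as pinning a process to chosen cores. Here is a minimal sketch using Python's Linux-only os.sched_getaffinity and os.sched_setaffinity. The set of CPU IDs treated as P-cores (ASSUMED_P_CORES) is a hypothetical placeholder: which logical IDs map to which core type depends on the machine, so check your own topology before relying on it.

```python
import os

# Hypothetical placeholder: the mapping of logical CPU IDs to P-cores is
# machine-specific; these IDs are NOT a verified M1 topology.
ASSUMED_P_CORES = {4, 5, 6, 7}


def pin_to_cores(wanted: set[int], pid: int = 0) -> set[int]:
    """Restrict `pid` (0 = the caller) to the CPUs in `wanted` that it may use.

    If none of the wanted CPUs are available, the affinity is left untouched.
    Returns the affinity mask actually in effect afterwards.
    """
    available = os.sched_getaffinity(pid)
    target = wanted & available
    if target:
        os.sched_setaffinity(pid, target)
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    print("before:", sorted(os.sched_getaffinity(0)))
    print("after: ", sorted(pin_to_cores(ASSUMED_P_CORES)))
```

Intersecting with the currently allowed CPUs keeps the call from failing on machines that simply don't have the assumed IDs.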

The blue pill approach is the one almost every commercial OS takes: Apple's OSes, Windows and almost every smartphone OS out there have been doing it for a while. They have to deliver both battery life and performance, after all. Things get tricky, and interesting, with heterogeneous computing. Here's where the race to the bottom starts.

Asymmetric multiprocessing ft. Apple

Apple clearly seems to be prioritizing battery efficiency across its mobile devices, be it the iPhone, the iPad or the MacBook line.

- iOS/iPadOS: Being mobile OSes running on devices with low-capacity batteries, these OSes (need to) take drastic measures, including but not limited to the following:

  - Asking developers to choose background strategies for their apps: Obviously, this is necessary, and Android does some of what follows as well. Read this bit of Apple's Developer Documentation:

    The system may place apps in the background at any time. If your app performs critical work that must continue while it runs in the background, use beginBackgroundTask(withName:expirationHandler:) to alert the system. Consider this approach if your app needs to finish sending a message or complete saving a file. The system grants your app a limited amount of time to perform its work once it enters the background. Don't exceed this time, and use the expiration handler to cover the case where the time has depleted to cancel or defer the work.

  - Background downloads in iOS: Here's where things take a drastic turn. While background downloads are possible in iOS for conventional protocols using URLSession, things get rough when you want a custom protocol, like BitTorrent. A torrent client can technically be built for iOS/iPadOS using the Network framework, but with these limitations:

    - It must always be in the foreground.

    - If the app goes into the background, it'll be suspended or terminated, and the torrent client loses its connections. To get around this limitation, your app would need to play music in the background. Obviously, this is a terrible design, not to mention that it might actually consume a lot of battery.

    - If you wanna avoid both of the above limitations, jailbreak iOS/iPadOS and install a background-apps tweak.

- macOS: I'll quote the article again:

Unlike Asahi Linux, macOS doesn’t provide direct access to cores, core types, or clusters, at least not in public APIs. Instead, these are normally managed through Grand Central Dispatch using Quality of Service (QoS) settings, which macOS then uses to determine thread management policies.

Threads with the lowest QoS will only be run on the E cluster, while those with higher QoS can be assigned to either E or P clusters. The latter behaviour can be modified dynamically by the taskpolicy command tool, or by the setpriority() function in code. Those can constrain higher QoS threads to execution only on E cores, or on either E or P cores. However, they cannot alter the rule that lowest QoS threads are only executed on the E cluster. macOS itself adopts a strategy where most, if not all, of its background tasks are run at lowest QoS.
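The rules in that quote fit in a few lines. This is a sketch of the policy as the article describes it, not macOS's scheduler or any Apple API. The QoS names mirror Grand Central Dispatch's QoS classes, but the numeric ranks are illustrative only, and the constrained_to_e flag is a hypothetical stand-in for what taskpolicy or setpriority() can do.

```python
from enum import IntEnum


class QoS(IntEnum):
    """GCD-style QoS classes ordered lowest to highest.

    The integer ranks are illustrative, not Apple's actual raw values.
    """
    BACKGROUND = 0  # the lowest QoS in the article's rules
    UTILITY = 1
    DEFAULT = 2
    USER_INITIATED = 3
    USER_INTERACTIVE = 4


def allowed_clusters(qos: QoS, constrained_to_e: bool = False) -> frozenset[str]:
    """Clusters a thread may run on, per the rules quoted from the article.

    `constrained_to_e` models a taskpolicy/setpriority() restriction. It can
    narrow higher-QoS threads to the E cluster, but nothing lets a
    lowest-QoS thread reach the P cluster.
    """
    if qos == QoS.BACKGROUND:
        return frozenset({"E"})  # lowest QoS: E cluster only, no override
    if constrained_to_e:
        return frozenset({"E"})  # higher QoS, restricted to E cores
    return frozenset({"E", "P"})  # higher QoS: either cluster


if __name__ == "__main__":
    for q in QoS:
        print(q.name, sorted(allowed_clusters(q)))
```

The asymmetry is the point: the override only ever removes the P cluster from a thread's options, which is why a job pinned at the lowest QoS stays on the E cores.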

The article points out a flaw in macOS's Archive Utility:

if you download a copy of Xcode in xip format, decompressing that takes a long time as much of the code is constrained to the E cores, and there’s no way to change that.

And here's some clarification from an HN user in response to that claim:

If you decompress something with Archive Utility, you’ll see that it does spend some time on all the cores, although it’s definitely not using them equally. It’s just that it doesn’t spend very much time on them, because it does not parallelize tasks very well, so most of the time it will be effectively running on 1-2 threads with the other cores idle, which macOS will split over the efficiency cores and perhaps bring in one of the P-core clusters if it likes.

This simply translates to: it's a shot in the dark for macOS. Yikes!
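The HN comment's point, that a poorly parallelized task leaves most cores idle no matter where the OS puts its threads, is what Amdahl's law describes. Neither the article nor the thread mentions it; it is brought in here only as an illustration. If a fraction p of a job can run in parallel on n cores, the best-case speedup over a single core is:

```latex
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}}
\qquad
S(8)\Big|_{p = 0.5} = \frac{1}{0.5 + 0.0625} \approx 1.78
\qquad
\lim_{n \to \infty} S(n) = \frac{1}{1 - p} = 2
```

Here p = 0.5 is an assumed value, not a measurement of Archive Utility. Even so, it shows why adding cores, P or E, buys little once the serial part dominates.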

So it seems this is a race that's been going on at Apple for quite some time. And they're running from both ends now: on iOS, they are moving from an OS design that gives good power savings to one that allows general-purpose computing in the broad sense of the word. On macOS, the challenge is to move from general-purpose computing to a design that incorporates good power savings.

Asymmetric multiprocessing ft. Intel

Intel, for all its troubles with its fabrication processes, is now jumping on the big.LITTLE bandwagon with its Lakefield and Alder Lake chips. Intel's Thread Director is a hardware-level thread analyzer that "provides runtime feedback" to the OS scheduler so it can make optimal scheduling decisions for any workload. It also adapts to thermals, as well as load and power requirements, on the fly, and works in conjunction with Windows 11 (and Linux kernel 5.18 onwards). Based on this article at AnandTech, here are the parameters Intel will consider:

- Workload mix: the ratio of loads, stores and branches, average memory access times, and patterns

- Types of instructions: It monitors which instructions are power-hungry, such as AVX-VNNI (for machine learning) or other AVX2 instructions that often draw high power, and puts a big flag on those for the OS to prioritize.

- Frequency: If a thread is limited in a way other than frequency, it can detect this and reduce frequency, voltage, and power.

The same article notes:

a thread will initially get scheduled on a P-core unless they are full, then it goes to an E-core until the scheduler determines what the thread needs, then the OS can be guided to upgrade the thread. In power limited scenarios, such as being on battery, a thread may start on the E-core anyway even if the P-cores are free.
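The placement rule in that quote can be sketched as a toy model. This is not Intel's or Windows' actual scheduler. CoreState, the "wait" result and the boolean hint are hypothetical simplifications, and always choosing an E-core on battery is a stronger reading of the quote's "may start on the E-core".

```python
from dataclasses import dataclass


@dataclass
class CoreState:
    free_p: int       # idle P-cores
    free_e: int       # idle E-cores
    on_battery: bool  # stand-in for a "power limited scenario"


def initial_core(state: CoreState) -> str:
    """Pick a core type for a new thread: "P", "E", or "wait" if all are busy."""
    if state.on_battery and state.free_e > 0:
        # Power-limited: start on an E-core even if P-cores are free.
        return "E"
    if state.free_p > 0:
        return "P"  # default: a P-core unless they are full
    if state.free_e > 0:
        return "E"  # P-cores full: fall back to an E-core
    return "wait"


def maybe_upgrade(current: str, hint_prefers_p: bool, state: CoreState) -> str:
    """Later step: move an E-core thread to a free P-core when the hardware
    hint (a hypothetical stand-in for Thread Director feedback) suggests it."""
    if current == "E" and hint_prefers_p and state.free_p > 0:
        return "P"
    return current


if __name__ == "__main__":
    busy = CoreState(free_p=0, free_e=4, on_battery=False)
    core = initial_core(busy)  # P-cores full, so the thread starts on an E-core
    print(core, maybe_upgrade(core, True, CoreState(1, 3, False)))
```

Splitting placement into an initial guess and a later upgrade mirrors the quote: the OS starts with a cheap decision and revises it once the hardware has observed the thread.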

Asymmetric multiprocessing ft. OnePlus

I have to admit, being a OnePlus 6 owner, I added this one for the lulz. Some time ago, OnePlus admitted to throttling some popular apps on OnePlus 9 series devices in the interest of getting more out of the battery.

Why build a bunch of algorithms to guess the user experience when you can simply (and quite accurately, though subjectively) test the user experience manually and pick one of the 3 positions on the power slider per app: battery, balanced or performance? Quite the approach.
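The per-app approach boils down to a hand-maintained lookup table instead of a runtime heuristic. Here is a minimal sketch, assuming a hypothetical table keyed by package name and a BALANCED default for apps nobody has tested. Neither detail comes from OnePlus, and the package names are placeholders.

```python
from enum import Enum


class Profile(Enum):
    BATTERY = "battery"
    BALANCED = "balanced"
    PERFORMANCE = "performance"


# Hypothetical hand-tuned table: each entry records a human decision made
# after testing that app, not something inferred at runtime.
PER_APP_PROFILE = {
    "com.example.game": Profile.PERFORMANCE,
    "com.example.chat": Profile.BATTERY,
}


def profile_for(package: str) -> Profile:
    """Look up an app's profile, defaulting to BALANCED for untested apps."""
    return PER_APP_PROFILE.get(package, Profile.BALANCED)


if __name__ == "__main__":
    for app in ("com.example.game", "com.example.unknown"):
        print(app, profile_for(app).value)
```

The trade-off shows up in the default: every app missing from the table gets a guess, which is exactly where a manual approach stops scaling.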


But OnePlus, in all its wisdom, often kills my Alarm app. And I need my alarm to wake up.

Conclusion

It's humans vs. machines all over again (another Matrix reference). Although the former gives better (if subjective) results, the latter is more scalable. The answer to the problem, however, lies in a sweet spot somewhere in between.