Fizz Buzz Pro on Elixir

This post is inspired by the following StackExchange CodeGolf thread. For the uninitiated, Fizz Buzz is the programming equivalent of a “Hello World” - a simple task used in job interviews to filter out people who can’t code their way out of a paper bag.

The challenge:

Write a program that prints numbers from 1 to a very high number. For multiples of 3, print “Fizz” instead. For multiples of 5, print “Buzz”. For multiples of both, print “FizzBuzz”.

The output must go on forever (or at least to 2^58) without halting prematurely.

Simple, right? A 2-minute problem at most.

I spent 2 weekends on this.

The baseline: C does it easily

Here’s your standard C implementation:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

#include <stdio.h>

int main() {

for (int i = 1; i < 1000000000; i++) {

if ((i % 3 == 0) && (i % 5 == 0)) {

printf("FizzBuzz\n");

} else if (i % 3 == 0) {

printf("Fizz\n");

} else if (i % 5 == 0) {

printf("Buzz\n");

} else {

printf("%d\n", i);

}

}

}

Compile it, run it, done. 65-70 MiB/s on my 2019 Intel MacBook Pro.

“This should be easy to port to Elixir,” I thought, like a fool.

The humbling: Elixir’s first attempt

1

2

3

4

5

6

7

8

9

10

11

defp reply(n) do

case {rem(n, 3), rem(n, 5)} do

{0, 0} -> "FizzBuzz\n"

{0, _} -> "Fizz\n"

{_, 0} -> "Buzz\n"

{_, _} -> "#{n}\n"

end

end

Stream.map(lower..upper, &reply/1)

|> Enum.into(IO.binstream)

To measure throughput:

1

2

MIX_ENV=prod mix escript.build

./fizzbuzz 1 1000000000 | pv > /dev/null

Result: 2-3 MiB/s.

Two. To. Three.

That’s not a typo. The Elixir version was running at roughly 3% of the C version’s speed. For a counting game.

And thus began my descent into madness.

Optimization #1: Stop screaming into the void one number at a time

The obvious problem: we’re making an IO call for every single number. A billion numbers means a billion IO calls. Each IO call has overhead. We’re basically knocking on the operating system’s door a billion times asking “can you print this one number please?”

The OS, understandably, is not amused.

Solution: batch the output into chunks.

1

2

3

Stream.map(lower..upper, &reply/1)

|> Stream.chunk_every(6000)

|> Enum.into(IO.binstream)

Instead of printing one number at a time, we now collect 6000 results and print them all at once.

Result: 18 MiB/s

We just 6x’d our performance by adding one line. This is the kind of win that makes you feel smart for about 30 seconds before you realize you’re still 4x slower than C.

Optimization #2: Put those cores to work

Modern computers have multiple cores. My laptop has 12 threads. Currently, 11 of them are sitting idle watching one core do all the FizzBuzz work.

The plan: divide the input range into chunks, process them in parallel, then print results in order.

For input 1..1000, we create sub-ranges: [1..100, 101..200, 201..300, ..., 901..1000]

1

2

3

4

5

6

7

8

9

10

11

defp get_input_ranges(lower, upper, chunk_size) do

if chunk_size >= 10 do

if lower >= upper do

[]

else

[lower..min(lower+chunk_size, upper) | get_input_ranges(min(lower+chunk_size, upper) + 1, upper, chunk_size)]

end

else

[lower..upper]

end

end

But what’s the right chunk size?

Too small: We spin up thousands of tasks that do almost nothing. The overhead of managing tasks exceeds the work itself.

Too big: Each task holds its results in memory while waiting to print. Giant chunks = giant memory usage.

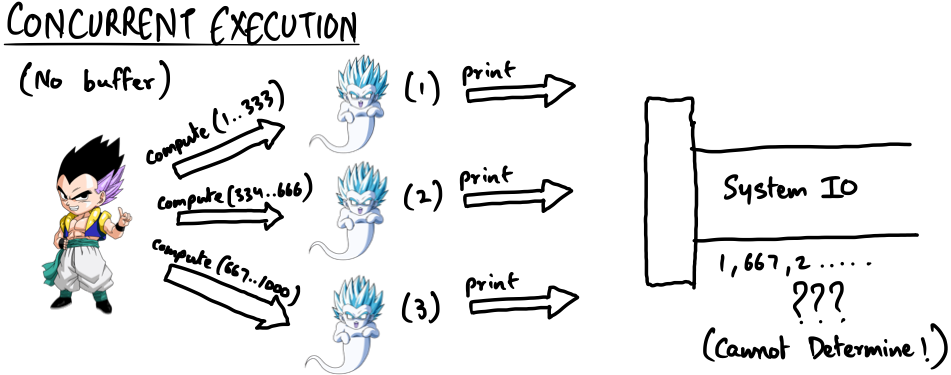

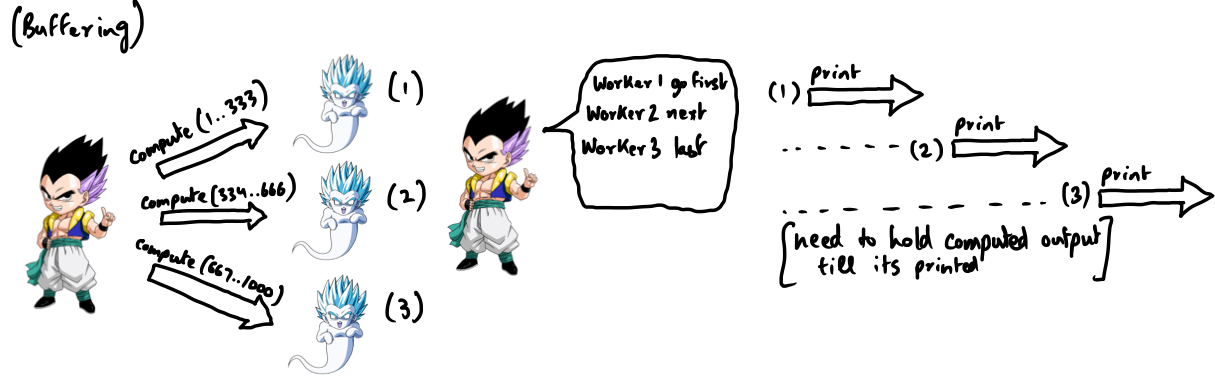

Here’s the thing about concurrency: a task can’t just print whenever it wants. Task #47 has to wait for tasks #1-46 to print first, or we’ll get FizzBuzz soup. So every task sits there, holding its results hostage, waiting for its turn.

FIGURE 2: Concurrent execution without buffering - chaos

FIGURE 2: Concurrent execution without buffering - chaos

FIGURE 3: Concurrent execution with buffering - controlled chaos

FIGURE 3: Concurrent execution with buffering - controlled chaos

The magic formula I landed on:

1

chunk_size = min(div(upper - lower, System.schedulers_online), 6000)

This gives us chunks that are:

- Small enough to not explode memory

- Big enough to be worth the task overhead

- Conveniently sized for IO batching (remember our 6000-item chunks from earlier?)

Optimization #3: Task.async_stream to the rescue

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

defmodule Fizzbuzz do

def fizzbuzz(enumerable) do

Stream.map(enumerable, &reply/1)

|> Stream.chunk_every(6000)

|> Enum.into([])

end

end

defmodule Fizzbuzz.Cli do

def main([lower, upper]) do

{lower, upper} = {String.to_integer(lower), String.to_integer(upper)}

chunk_size = min(div(upper - lower, System.schedulers_online), 5000)

input_enumerable = get_input_ranges(lower, upper, chunk_size)

stream = Task.async_stream(input_enumerable,

fn range -> Fizzbuzz.fizzbuzz(range) end,

timeout: :infinity)

Enum.reduce(stream, :ok,

fn {:ok, res}, _acc -> res |> Enum.into(IO.binstream) end

)

end

end

Quick note on iolists: In Elixir, concatenating lists is expensive. But we can cheat using iolists - special lists that can contain strings, bytes, or other iolists. IO operations flatten them automatically. It’s like telling the printer “here’s a box of boxes of papers” and it just figures it out.

Result: 40 MiB/s

We’re now faster than half of C. Progress!

Optimization #4: GenServer over Task (reduce data ping-pong)

Here’s the problem with Task: it computes results, sends them back to the main process, which then sends them to the IO process. That’s two hops for every chunk of data.

1

Worker Task → Main Process → IO Process

What if workers could talk directly to IO?

With GenServer, we can tell a worker “compute now, print later” - and when we say print, it sends directly to IO. One hop instead of two.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

defmodule Fizzbuzz.Worker do

use GenServer

def init([range]) do

send(self(), {:calculate, range})

{:ok, []}

end

def handle_info({:calculate, range}, _state) do

res = Fizzbuzz.fizzbuzz(range)

{:noreply, res}

end

def handle_call(:print, _from, results) do

results |> Enum.into(IO.binstream)

{:reply, :ok, []}

end

end

The driver spawns workers, waits for them to compute, then tells them to print in order:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

def main([lower, upper]) do

{lower, upper} = {String.to_integer(lower), String.to_integer(upper)}

chunk_size = min(div(upper - lower, System.schedulers_online), 6000)

input_enumerable = get_input_ranges(lower, upper, chunk_size)

Task.async_stream(input_enumerable,

fn input -> elem(GenServer.start_link(Fizzbuzz.Worker, [input]), 1) end,

timeout: :infinity)

|> Stream.map(fn {:ok, res} -> res end)

|> Stream.each(fn pid ->

GenServer.call(pid, :print)

Process.exit(pid, :kill) # You've served your purpose. Goodbye.

end)

|> Stream.run()

end

Result: 80 MiB/s

We’ve now surpassed C. But we’re just getting started.

Optimization #5: String interpolation is secretly expensive

Look at this innocent line:

1

{_, _} -> "#{n}\n" # <- This guy right here

String interpolation creates a new string every time. For a billion numbers, that’s a billion string allocations. We can use iolists instead:

1

2

3

4

5

6

7

8

defp reply(n) do

case {rem(n, 3), rem(n, 5)} do

{0, 0} -> "FizzBuzz\n"

{0, _} -> "Fizz\n"

{_, 0} -> "Buzz\n"

{_, _} -> [Integer.to_string(n), "\n"] # iolist, not string

end

end

“This can’t possibly matter that much,” I thought.

Result: 150 MiB/s

It mattered. Almost 2x improvement from changing one line. The reply function gets called a lot.

Optimization #6: Don’t materialize 48 trillion elements

The problem statement says we need to handle up to 2^58 numbers. Let’s try it:

1

./fizzbuzz 1 288230376151711744

The program hangs.

Why? Our get_input_ranges function returns a list. For 2^58 with 6000-sized chunks, that’s 48 trillion list elements. We’d need 48,000+ GB of RAM just to store the list of ranges, let alone process them.

Solution: streams. Generate ranges lazily, one at a time.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

defmodule ChunkRangeStream do

def create(range, chunk_size) do

Stream.resource(

fn -> initialize(range, chunk_size) end,

&generate_next_value/1,

&done/1

)

end

defp initialize(range, chunk_size), do: {range, chunk_size, range.first}

defp generate_next_value({range, chunk_size, lower}) do

if lower < range.last do

next_range = lower..min(lower+chunk_size, range.last)

new_lower = min(range.last, lower+chunk_size+1)

{[next_range], {range, chunk_size, new_lower}}

else

{:halt, {range, chunk_size, lower}}

end

end

defp done(_), do: nil

end

Now we generate ranges on-demand. Memory crisis averted.

Optimization #7: Exploit the pattern

Here’s a shower thought: FizzBuzz has a period of 15.

1

1, 2, Fizz, 4, Buzz, Fizz, 7, 8, Fizz, Buzz, 11, Fizz, 13, 14, FizzBuzz

Then it repeats, just with bigger numbers. We can hardcode the pattern and just fill in the numbers:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

def fizzbuzz6k(range) do

i = range.first - 1

0..399

|> Stream.map(fn j ->

[[Integer.to_string(15*j + 1 + i), "\n"],

[Integer.to_string(15*j + 2 + i), "\n"],

"Fizz\n",

[Integer.to_string(15*j + 4 + i), "\n"],

"Buzz\nFizz\n",

[Integer.to_string(15*j + 7 + i), "\n"],

[Integer.to_string(15*j + 8 + i), "\n"],

"Fizz\nBuzz\n",

[Integer.to_string(15*j + 11 + i), "\n"],

"Fizz\n",

[Integer.to_string(15*j + 13 + i), "\n"],

[Integer.to_string(15*j + 14 + i), "\n"],

"FizzBuzz\n"]

end)

|> Enum.chunk_every(400)

end

No more modulo operations. No more conditionals. Just math and string conversion.

For this to work, we need to split our input into three parts:

- Beginning: Numbers before the first multiple of 15 (processed normally)

- Middle: The bulk of the work, in 6000-number chunks (processed with the pattern)

- End: Leftover numbers (processed normally)

Result: 175 MiB/s

Optimization #8: Stop copying gigabytes of data

In Erlang/Elixir, messages between processes are copied. Our GenServer computes an iolist, sends it to IO process = one full copy.

But there’s an exception: refc binaries (strings > 64 bytes) are passed by reference, not copied.

So if we convert our iolist to a binary before sending…

1

2

3

4

5

6

7

8

9

def handle_info({:calculate, range}, _state) do

res = Fizzbuzz.fizzbuzz(range)

{:noreply, res |> :erlang.iolist_to_binary} # Convert to binary

end

def handle_call(:print, _from, results) do

IO.binwrite(results) # Now passing a reference, not copying

{:reply, :ok, []}

end

For a billion numbers, that’s 166,666 chunks. Each chunk was being fully copied. Now it’s just passing pointers.

Result: 230 MiB/s

Since IPC is no longer the bottleneck, we can now use bigger chunks and pass even more data per worker.

Result: 300+ MiB/s

Optimization #9: Erlang’s IO has too many features

Erlang’s standard IO is designed for distributed systems. When you call IO.puts, it:

- Ensures atomic ordering (no interleaved output from concurrent calls)

- Forwards to a “group leader” process (for distributed environments)

- Handles all sorts of edge cases we don’t care about

We’re not distributed. We’re not concurrent on IO. We just want to blast bytes to stdout.

Enter: ports. Direct communication with the OS, no middleman.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# Open a port to stdout

port = :erlang.open_port({:fd, 0, 1}, [:binary, :out])

# Hand control to a worker

send(port, {self(), {:connect, worker_pid}})

# Worker writes directly

send(port, {self(), {:command, results}})

# Hand control back to main

send(port, {self(), {:connect, main_pid}})

# Close when done

send(port, {self(), :close})

Result: 550+ MiB/s

We’ve removed IO as the bottleneck. The only limit now is how fast we can generate numbers.

Optimization #10: When in doubt, Rust

At this point, I’d squeezed everything I could from pure Elixir. Time to bring in the heavy artillery.

Using Rustler, I ported the number-crunching to Rust and called it as a NIF (Native Implemented Function).

Result: 3 GiB/s

That’s a 6x improvement just from recompiling the hot path in Rust. The Elixir code still handles orchestration, concurrency, and IO - Rust just does the math faster.

The scoreboard

| Optimization | Throughput | Improvement |

|---|---|---|

| Naive Elixir | 2-3 MiB/s | Baseline |

| Chunked IO | 18 MiB/s | 6x |

| Parallel tasks | 40 MiB/s | 2x |

| GenServer | 80 MiB/s | 2x |

| Iolist optimization | 150 MiB/s | 1.9x |

| Pattern exploitation | 175 MiB/s | 1.2x |

| Refc binaries | 300+ MiB/s | 1.7x |

| Raw ports | 550+ MiB/s | 1.8x |

| Rust NIF | 3 GiB/s | 5.5x |

Total improvement: ~1000x over naive Elixir. ~46x over naive C.

All for a children’s counting game.

What I learned

- IO is usually the bottleneck. Batch your writes.

- Concurrency helps, but coordination costs. Choose your chunk sizes wisely.

- Data copying is expensive. Use refc binaries when passing large data between processes.

- Standard library IO is feature-rich. Sometimes you just need a raw pipe.

- Know when to drop to native code. Elixir is great for orchestration; Rust is great for math.

- Premature optimization is the root of all evil, but mature optimization is pretty fun.

Was any of this practical? Absolutely not. Would I do it again? In a heartbeat.

Code is available on GitHub.

Credits

All the amazing folks at ElixirForum who answered my increasingly unhinged questions: https://elixirforum.com/t/question-regarding-improving-fizzbuzz-io-performance/56816